Automating the search for the fairest model

Heading into 2021, banks and other lenders are pledging to do more to address economic and racial inequities in financial services. The effort is long overdue, but the return on that necessary investment could be immense. A recent report from Citigroup estimated that fair and equitable lending to Black entrepreneurs might have generated an additional $13 trillion in business revenue and potentially created 6.1 million jobs per year over the past 20 years.

Consumers are demanding change, too. A recent Harris Poll found that half of Americans think the lending system is unfair to people of color and that 7 out of 10 Americans would switch to a financial institution with more inclusive practices. Recognizing that the status quo isn’t working, banks and credit unions are evaluating new approaches and technology to break the cycle.

The path to increased financial inclusion begins with identifying what’s wrong with the status quo and understanding the new approaches and technology to lend more inclusively without taking on added risk.

Fair Lending Catch-22

Despite their best efforts, many lenders still struggle to make underwriting models both accurate and fair. Traditionally, there has been a trade-off between accuracy in risk prediction and the goal of minimizing bias and disparate impact. Finding the source of bias in a traditional logistic regression lending model is not terribly hard. The hard part is getting rid of that bias without wrecking the accuracy of the model. Why? The traditional techniques for generating less discriminatory alternative models force lenders to drop crucial variables to improve fairness, and accuracy suffers. As a result, those variables end up back in the model on business-justification grounds, and the needle fails to move on inclusion. With the shift to machine learning (ML) underwriting, lenders have an arsenal of new techniques to mitigate protected-class bias in any lending model with little to no hit to accuracy.

Machine Learning Breaks the Cycle

Zest AI has patented a technique that automates this search for less discriminatory alternative (LDA) models without using protected-class data as an input to the risk model, which would introduce compliance risk. Zest’s LDA search uses an ML technique called adversarial de-biasing that pairs a credit risk model tuned for maximum accuracy with a companion model that tries to guess the race of the borrower being scored. If the approvals correlate strongly with race, the companion model passes back instructions that adjust the risk model in minute ways across the entire set of variables. Think of it as a fairness mixing board in a recording studio where all the settings adjust on their own to reduce disparity in approval rates with little to no impact on accuracy.
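To make the two-model idea concrete, here is a minimal toy sketch of generic adversarial de-biasing in plain Python. It is not Zest’s patented implementation. Both models are simple logistic regressions, and the function name `train_debiased`, the `fairness_weight` knob, and the tiny dataset are all hypothetical, made up for illustration. The group label is seen only by the companion model during training and is never a feature of the risk model.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_debiased(X, repaid, group, fairness_weight, epochs=300, lr=0.1):
    """Toy adversarial de-biasing with two logistic models (illustrative only).

    X               feature rows, with no protected-class columns
    repaid          1 if the loan was repaid, else 0
    group           1 if the borrower is in the protected class, else 0;
                    used only by the companion model during training
    fairness_weight hypothetical knob; 0 gives plain logistic regression
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0   # risk model weights and bias
    u, c = 0.0, 0.0         # companion model: guesses group from the risk logit
    for _ in range(epochs):
        grad_w, grad_b, grad_u, grad_c = [0.0] * d, 0.0, 0.0, 0.0
        for x, y, a in zip(X, repaid, group):
            z = b + sum(wj * xj for wj, xj in zip(w, x))
            p = sigmoid(z)          # predicted probability of repayment
            q = sigmoid(u * z + c)  # companion's guess that group == 1
            # The companion model minimizes its own log loss.
            grad_u += (q - a) * z
            grad_c += q - a
            # The risk model minimizes its log loss minus the companion's
            # log loss, so it is nudged toward scores that reveal less
            # about group membership.
            dz = (p - y) - fairness_weight * (q - a) * u
            grad_b += dz
            for j in range(d):
                grad_w[j] += dz * x[j]
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
        u -= lr * grad_u / n
        c -= lr * grad_c / n
    return w, b

# Made-up data: column 0 is a repayment signal, column 1 is a proxy for group.
X = [[1, 0]] * 4 + [[1, 1]] + [[0, 0]] + [[0, 1]] * 4
repaid = [1] * 5 + [0] * 5
group = [0] * 4 + [1] + [0] + [1] * 4

plain_w, plain_b = train_debiased(X, repaid, group, fairness_weight=0.0)
fair_w, fair_b = train_debiased(X, repaid, group, fairness_weight=1.0)
```

Sweeping `fairness_weight` over a range of values is one simple way to produce a family of alternative models that trade a little accuracy for less disparity. A production system would use far richer models and would need careful tuning, since adversarial training can be unstable.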

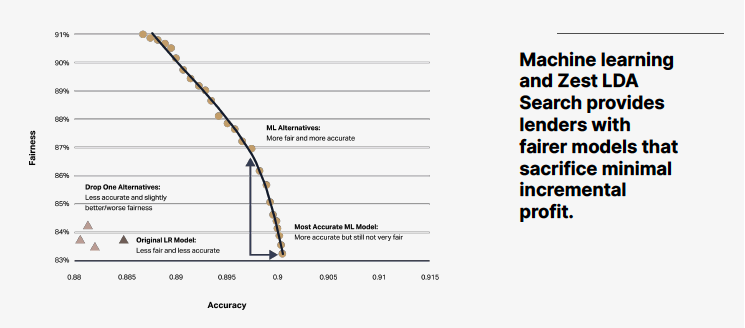

The graph above illustrates how lenders can now have real options for fair lending. The y-axis represents fairness, measured by the Adverse Impact Ratio (AIR). The x-axis represents the accuracy of the underwriting decision, measured by the area under the ROC curve (AUC), a standard measure of predictive power in data science. The original logistic regression model sits on the lower left of the chart, along with the less discriminatory alternatives generated by traditional techniques such as “drop one.” Those alternatives show a big hit to performance for not much gain in fairness. A Zest-built ML model starts out far more accurate, and then Zest’s automated LDA search produces a series of fairer alternatives (circles) that allow lenders to dramatically reduce disparate impact for only a minor reduction in incremental profit.
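For readers who want to compute the two axes themselves, the following is a minimal sketch of both metrics. It assumes the common definition of the Adverse Impact Ratio (the approval rate of the protected group divided by the approval rate of the comparison group) and scores where higher means more likely to repay. The function names are illustrative and do not come from any particular library.

```python
def adverse_impact_ratio(approved, protected):
    """Approval rate of the protected group divided by that of everyone else.

    approved   1 if the applicant was approved, else 0
    protected  1 if the applicant is in the protected group, else 0
    A value of 1.0 means both groups are approved at the same rate.
    """
    prot = [a for a, g in zip(approved, protected) if g]
    ctrl = [a for a, g in zip(approved, protected) if not g]
    if not prot or not ctrl:
        raise ValueError("need at least one applicant in each group")
    ctrl_rate = sum(ctrl) / len(ctrl)
    if ctrl_rate == 0:
        raise ValueError("comparison group has no approvals")
    return (sum(prot) / len(prot)) / ctrl_rate

def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a randomly chosen good loan (label 1)
    is scored higher than a randomly chosen bad loan (label 0),
    counting ties as one half. Quadratic time, fine for small samples.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one example of each label")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

An AUC of 0.5 corresponds to random guessing and 1.0 to perfect ranking, so moving right on the chart means better risk prediction and moving up means approval rates that are closer to parity.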

ML-powered fair lending finally offers lenders real choices. One auto lender’s model achieved a 4% increase in approvals for African-Americans for a mere 0.2% drop in performance (loosely translated: about two bucks in incremental profit per loan). A bank that offers personal loans achieved a 6% increase in approvals for borrowers of color for a mere 0.1% drop in performance.

And, because of the companion model approach to de-biasing, these gains are achieved without considering protected status as an input in the original risk model. Fairness is considered after the initial model is created. This has the effect of directing the modeling process toward a fairer model without violating regulatory prohibitions on disparate treatment. Lenders can choose the extent to which fairness enters into the process, develop many alternative models, and select one for use in production.
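One way to picture that final selection step is as a simple rule over the candidate models: keep every model whose accuracy is within a tolerance of the most accurate one, then pick the fairest of those. The sketch below assumes that rule purely for illustration. The `Candidate` class, the `pick_model` function, and all of the numbers are made up and are not results from any lender.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    name: str
    auc: float  # accuracy, higher is better
    air: float  # Adverse Impact Ratio, closer to 1.0 is fairer

def pick_model(candidates, baseline_auc, max_auc_drop):
    """Return the fairest candidate within max_auc_drop of baseline_auc."""
    eligible = [c for c in candidates if baseline_auc - c.auc <= max_auc_drop]
    if not eligible:
        raise ValueError("no candidate meets the accuracy tolerance")
    # Prefer the highest AIR; break ties with the higher AUC.
    return max(eligible, key=lambda c: (c.air, c.auc))

# Hypothetical candidates, for illustration only.
candidates = [
    Candidate("most_accurate", auc=0.800, air=0.70),
    Candidate("alternative_a", auc=0.799, air=0.78),
    Candidate("alternative_b", auc=0.790, air=0.90),
]

chosen = pick_model(candidates, baseline_auc=0.800, max_auc_drop=0.005)
```

The tolerance is a business decision rather than a technical one. Setting `max_auc_drop` to zero reduces the rule to picking the most accurate model, while a looser tolerance admits fairer alternatives.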

What these results show is that the double-optimization of ML models gives lenders better alternative models to choose from. Some lenders may select the most accurate ML model, but when presented with more choice, many lenders we work with are selecting models that cause less disparate impact.

Conclusion

Financial institutions are uniquely positioned to break the cycle of racial and economic inequality. The good news is that there are innovative technologies and techniques that enable banks and credit unions to unlock the marginalized and “credit invisible” markets, generating more opportunity for consumers and ultimately a more inclusive credit economy.

Check out an additional resource to jumpstart your fair lending strategy:

Whitepaper: Path to a Fairer Credit Economy